🦋🤖 Robo-Spun by IBF 🦋🤖

🫣🙃😏 Hypocritique 🫣🙃😏

Give This a Wow! Don’t Disavow! 🎵 Boogie Men’s Bogeymecha 🎵

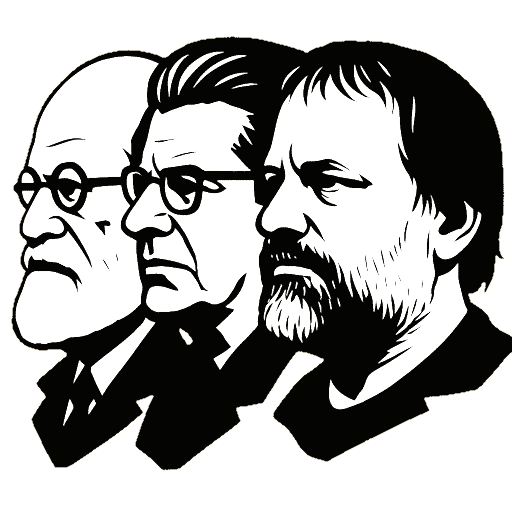

There are two characters newcomers should meet at the start. Cadell Last hosts an online project called Philosophy Portal, whose mascot is an owl—the classic emblem that “flies at dusk,” meaning wisdom that arrives after events have unfolded. Michael “Mikey” Downs runs a site called Dangerous Maybe, a name that leans into uncertainty: when the stakes feel highest, he opts for “maybe,” as if the future were always just a shade too early to commit. In their conversation about artificial intelligence and capitalism, these two stances—Cadell’s too-late owl and Mikey’s too-early maybe—meet in the middle as a shared, audible unease before the machine. They keep casting “capitalism” as a stage bogeyman while circling something they clearly find even spookier: a towering, mechanized future-intelligence. Call that looming figure the Bogeymecha. The playful phrase “Boogie men” simply names how their talk keeps dancing around it.

For someone new to this terrain, a quick map helps. The “bogeyman” is an imagined threat used to frighten; the Bogeymecha is that impulse in mecha-armor: not a personified demon, but a machine-like destiny that seems to roll forward regardless of anyone’s will. In psychoanalytic language (useful here because it’s the language their circle often uses), a “minus” or structural lack is the missing screw a system runs on; the system does not function despite the gap but through it. Jacques Lacan captured the way truth shows up in fragments with the line, “truth is only ever half-said.” Philosopher Alenka Zupančič, working in that tradition, recently granted that today’s large language models import “half of the subject,” that is, that a constitutive gap (“hallucinations”) is now inside the machine. Whether or not one agrees with every step, the immediate, commonsense takeaway is simple: even a half-said truth about what AI is doing to discourse is enough to make thoughtful people wary. In the Portal/Dangerous Maybe conversations, that wariness is the throughline. They keep pointing to the machine’s eerie growth while backing away from using it, and especially from treating everyday tools like ChatGPT as anything more than a cautionary tale.

Listen to how the aura gathers. Early in their joint sessions, one hears: “We’re on a path towards the production of superintelligence—the emergence of a superintelligent entity or entities in the world… it seems like this entity is building itself into existence before our very eyes.” That sentence is a movie trailer in a line—no actor, no committee, just an “entity” assembling itself. Moments later, a metaphor from astrophysics: “A singularity is basically… like with a black hole… once you cross a certain threshold, there’s no way to predict what’s going to happen.” For a newcomer, “singularity” means a point past which our models collapse. The pairing of “building itself” with “no way to predict” plants an unmistakable feeling: we are late to our own story. This is Cadell’s owl.

Danger comes wrapped in capitalism, too, but in their framing capitalism is not merely a social system. It becomes an intelligence in its own right: “Capitalism is artificial intelligence.” The supporting line is as old as price theory and as new as high-frequency trading: “Markets function as AI because it’s not humans that are determining the prices of things; markets function as an AI system even back then.” Once code is added, even the coders are written out of confidence: “Once they configure the code in a certain way, it does the rest… it’s kind of like a black box… even the people who set it up… are unable to predict what it’s going to do.” Thread these together and a portrait emerges that any first-time reader can grasp: there is a machine-feeling to both capital and computation, and the speakers step back from it as from heavy machinery already in motion.

Timelines heighten the mood. Ray Kurzweil appears as an upbeat clockmaker—“in 2029 we’re going to have human-level language capacities… in 2045… beyond human-level superintelligence”—but the cadence of the conversation quickly bends from calendar to cyclone: “Once this feedback loop… is in place, it’s just unstoppable.” The vibe gets a name that sounds like pulp sci-fi but lands as myth: “The techno-capital singularity—or ‘robo-Cthulhu’—is a technological cyclone.” Even the philosophical garnish is storm-tossed: “The cyclone is the ‘fanged noumenon’… where it is, we are absent.” Newcomers don’t need a degree to feel what’s happening: two thinkers are narrating a weather system they can’t stand in. Their anxiety is not abstract; it has a rhythm, and the rhythm says: too late.

When the conversation turns to work, the unease becomes domestic. Picture someone at the dinner table asking what happens to ordinary wages as machines get better. Downstream of the “black box,” the question is blunt: “What happens if you have 70%–80% of a population out of work because there are literally no jobs to give them?” And then the household ledger pops into view—“Who buys the shit that you produce when the vast majority of the population is not receiving wages?… do they then run to the state and start begging them to do UBI?” It’s not a policy paper; it’s a shiver. The signature bumper sticker rings out as a sigh: “It’s easier to imagine the end of the world than the end of capital.” That line has circulated for years in left theory; here it functions as a refrain against an engine that won’t stop.

So where does ChatGPT—the everyday face of “the machine” for most people—fit? In these exchanges it appears like a neighbor’s dog that grew overnight. On the one hand, the uses are sketched matter-of-factly: “People are using [chatbots] to do therapy now.” “People are… using ChatGPT to write their songs… ‘Write a Drake-like song,’ and ChatGPT will spit it out in five seconds.” On the other hand, those examples are treated less as prompts to learn a new instrument and more as symptoms of encroachment. Newcomers can hear it: the tool is right there—available to prototype ideas, draft models, test intuitions—and yet in the conversation it mostly arrives as a sign that something ominous is happening to craft, care, and culture. That’s the Bogeymecha effect in miniature: everyday software felt as an emissary of a coming machine one does not dare to touch.

The branding of the two platforms lines up with this emotional geometry. Dangerous Maybe reads as an ethos: when it feels too early to speak decisively, say “maybe.” In the conversations, it sounds like a stance toward time itself: too early for a cut, too early to set down a plan, too early to use the tool in earnest. On the Portal side, the owl tacitly admits the other temporal worry: if wisdom arrives only at dusk, it is always too late for the day’s decisive act. Taken together, the pair forms a tidy axis for anyone just joining: Mikey’s “too early = maybe” and Cadell’s “too late = flies at dusk.” The middle of that axis is a soft, shared phobia of the Bogeymecha, an anxious fascination without hands-on intimacy.

Even capitalism is narrated with a mixture of awe and recoil that deepens the picture. Dark jokes, memorable because they’re sharp, sketch the feel: “Jesus can’t take the wheel, ’cause capital’s at the wheel.” The political-economic question becomes liturgy under the cyclone. Whether it’s markets that “function as AI” or code humming away without its author, the refrain is a wary respect for forces that overtake their makers. For newcomers, that’s the secret subtitle of the Boogie men’s Bogeymecha trope: the “boogie” names the nervous two-step before a machine that seems to lead, and the “Bogeymecha” is the costume that nervousness puts on the future.

Psychoanalysis circles back here, not to scold but to clarify why a half can be plenty. Zupančič’s “half of the subject” claim, whatever one thinks of its technicalities, pairs neatly with Lacan’s maxim that “truth is only ever half-said.” A half-truth about AI (say, that its errors are structural rather than accidental) is already enough to trigger an understandable recoil. The half doesn’t have to be completed to produce a behavioral effect. One doesn’t need a full metaphysics of “the subject” embedded in silicon to feel spooked by a system that can talk, sing, imitate, and surprise. Under the shadow of that monster-AI Bogeymecha, Cadell and Mikey are simply articulating a perfectly human reaction: a beat of awe, a step back, a preference to discuss rather than to tinker. In that light, their own lines read like little windows onto an apprehension they are not ashamed to voice. The “entity building itself” feels alive. The “black hole” makes time short. The “black box” refuses reassurance. The “cyclone” leaves the human absent. And the daily tool, the chatbot in the browser, sits there like a glowing doorknob nobody quite wants to turn.

This is why the joke folded into the headline lands without malice. “Dangerous Downs Don’t Last at Dusk” isn’t a jab; it’s a friendly gloss for newcomers on a pattern the two themselves narrate. Michael Downs’s “dangerous maybe” posture feels risky at dawn but dissolves by dusk; Cadell’s owl, stately and thoughtful, arrives after the light has gone. Together, they draw a horizon and stand just inside it, pointing out the storm and hesitating to step into the rain. The Bogeymecha is not a literal robot marching down the street; it’s the way machine-language and market-feedback can feel like an impersonal weather system. Their own lines make the feeling clear, and that clarity, more than any critique, is what a first-time reader needs to hear.

Once that is understood, the rest of the scenery becomes easier to read. When they say, “Kurzweil… in 2029 we’re going to have human-level language capacities… in 2045… beyond human-level superintelligence,” the dates are less about a betting pool than about a metronome ticking louder as both the owl and the maybe keep time. When they say, “Markets function as AI…” and “even the people who set it up… are unable to predict what it’s going to do,” the emphasis falls on a very old sentiment—we have built something bigger than us—with a very new interface. When they say, “People are using [chatbots] to do therapy now” or “write a Drake-like song,” the examples double as a reminder that the door is open, and that opening the door still feels like letting the wind in.

In other words, Boogie men’s Bogeymecha isn’t an insult; it’s a capsule description of how this duo’s public thinking renders the machine that haunts our headlines. Their words fuel the picture, their brands sketch the timeline, and their tone makes plain the feeling that sits beneath both. A newcomer can step into the room and, without prior reading, understand the map: a dusk-owl that arrives late, a dangerous maybe that holds back early, a market-machine that computes, a code-box that hums, a cyclone that pulls at the windows, and a household asking who buys dinner when wages stall. The phobia is not a flaw; it’s a broadcast. And in that broadcast, one can hear the old rumor at the edge of the internet—half-said, sufficient to unsettle, wishing it weren’t true, and still, somehow, unable not to look: ROKO’S BASILISK

[…] — Dangerous Downs Don’t Last at Dusk: Boogie Men’s Bogeymecha […]